Introduction

Explainable AI (XAI) is an evolving field that seeks to demystify the inner workings of artificial intelligence models. In essence, XAI aims to make AI decisions transparent and understandable to humans. This is particularly crucial in design, where decisions can significantly impact user experiences and business outcomes.

As AI plays an increasingly prominent role in shaping products and services, it becomes essential to understand how these systems arrive at their conclusions. Without transparency, trust in AI-driven design is difficult to establish, and errors and biases can go unchecked.

Thus, Explainable AI is not merely an option but a necessity in the AI UX design process. This comprehensive article explores the significance of XAI in design, discussing its benefits, challenges, and best practices. Let's dive in!

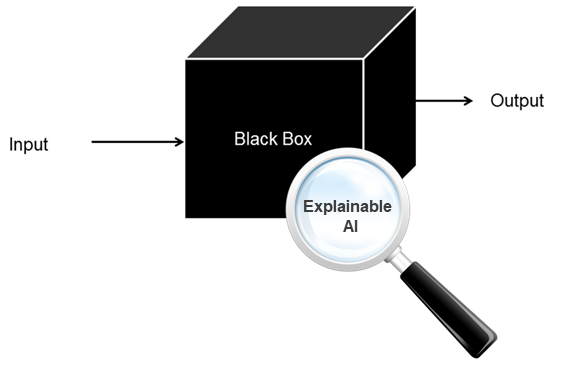

The Black Box Problem in AI Design

At the heart of many AI systems lie complex algorithms often referred to as "black boxes." These models are adept at processing vast amounts of data and producing accurate outputs, but their internal workings remain opaque to human understanding.

While this approach has yielded impressive results in various fields, it poses significant problems in design, where human intuition and empathy play crucial roles.

The Challenges of Understanding AI Decision-Making Processes

Unraveling the intricacies of AI decision-making is akin to deciphering a complex puzzle. The complexity of these models, coupled with the vastness of the data they process, makes it incredibly difficult to comprehend how they arrive at specific outputs. This lack of transparency hinders our ability to identify errors, biases, or unintended consequences in the design process.

The Risks of Opaque AI Systems in Design

If an AI system generates a suboptimal or even harmful design, it's challenging to pinpoint the root cause without understanding its decision-making process. This may lead to costly mistakes, reputational damage, and a loss of trust in AI-driven design solutions. Moreover, without transparency, it's difficult to ensure that AI systems align with ethical principles and avoid perpetuating biases.

Why We Need XAI

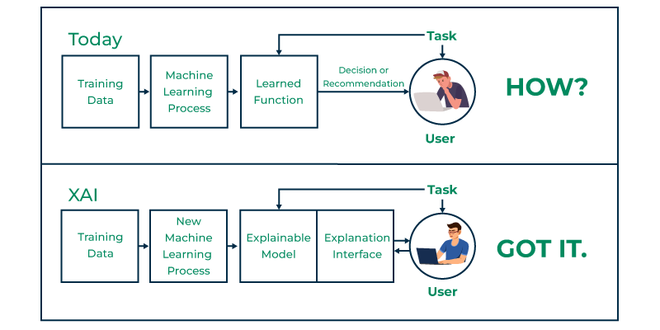

To address the limitations of black-box models, the concept of Explainable AI (XAI) emerged. XAI aims to shed light on the decision-making processes of AI systems, making them more understandable and accountable.

By providing insights into how AI arrives at its conclusions, XAI can help designers build trust, identify errors, and ensure that AI-driven designs align with human values and expectations.

The Benefits of Explainable AI in Design

Explainable AI offers numerous advantages for the UX design process. Let's cover them in this section.

#1. Enhanced User Experience

Understanding the reasoning behind AI-driven recommendations or actions leads to a smoother and more intuitive user experience. When users comprehend why a certain path was chosen or a decision made by AI, they are more likely to feel in control of their interactions and perceive the system as responsive to their needs.

#2. Enhanced Trust and User Acceptance

Transparency is fundamental to building trust. When users understand how AI systems arrive at their decisions, they are more likely to accept and rely on AI-driven designs. XAI can help bridge the gap between humans and machines, promoting a sense of confidence in the technology.

#3. Improved Decision-Making and Problem-Solving

By understanding the reasoning behind AI-generated designs, designers can make more informed decisions. XAI can help identify strengths and weaknesses in AI models, leading to refinements and optimizations. Additionally, designers who can explain the decision-making process collaborate more effectively with stakeholders and gain buy-in for design choices.

#4. Identification and Mitigation of Bias

AI systems can inherit biases present in the data they are trained on. XAI can help uncover these biases by providing insights into the factors influencing AI decisions. With this knowledge, designers can take steps to mitigate bias and ensure fairness in design outcomes.

#5. Compliance with Regulations and Ethical Standards

Many industries are subject to regulations that require transparency and accountability. XAI can help organizations comply with these requirements by providing clear explanations of AI-driven decisions. Moreover, XAI aligns with ethical principles by promoting fairness, transparency, and human oversight in the design process.

Overview of Different XAI Techniques

Explainable AI offers a range of techniques to demystify complex AI models. Armed with an understanding of these methods, designers can gain valuable insights into the decision-making processes of AI systems.

Model-Agnostic Methods

Model-agnostic methods explain the predictions of any machine learning model, regardless of its internal structure. They treat the model as a black box, observing only how its outputs change as its inputs change. Common methods include:

LIME (Local Interpretable Model-Agnostic Explanations): Peeling Back the Layers

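LIME explains an individual prediction by approximating the complex model, in the neighborhood of that one input, with a simple model that humans can understand. It generates slightly perturbed variations of the input, records how the model's output changes, and fits an interpretable model (typically a linear one) to those samples, giving more weight to variations closer to the original. Imagine trying to learn a foreign language; LIME provides a basic dictionary to help you grasp the main points. Because it focuses on one prediction at a time, it breaks down the model's decision-making process for that particular instance rather than for the model as a whole.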
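To make the idea concrete, here is a minimal LIME-style sketch in plain Python. It is a simplification, not the `lime` library's implementation: it assumes numeric features, perturbs the input with Gaussian noise, weights each sample with an exponential proximity kernel, and fits a weighted linear model. The model `black_box`, its feature names and the parameters `scale` and `kernel_width` are hypothetical choices for illustration.

```python
import math
import random


def black_box(x):
    """Hypothetical opaque recommendation model: returns a score between 0 and 1."""
    z = 3.0 * x[0] + 0.5 * x[1] - 2.0 * x[2]
    return 1.0 / (1.0 + math.exp(-z))


def solve(a, b):
    """Solve the square system a @ w = b with Gauss-Jordan elimination."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(n):
            if r != col:
                factor = m[r][col] / m[col][col]
                for c in range(col, n + 1):
                    m[r][c] -= factor * m[col][c]
    return [m[i][n] / m[i][i] for i in range(n)]


def lime_explain(model, instance, num_samples=500, scale=0.1, kernel_width=0.25, seed=0):
    """Fit a proximity-weighted linear surrogate around one instance."""
    rng = random.Random(seed)
    d = len(instance)
    # Weighted normal equations (X^T W X) w = X^T W y, with an intercept term.
    xtx = [[0.0] * (d + 1) for _ in range(d + 1)]
    xty = [0.0] * (d + 1)
    for _ in range(num_samples):
        # 1. Perturb the instance with small Gaussian noise.
        delta = [rng.gauss(0.0, scale) for _ in range(d)]
        sample = [xi + di for xi, di in zip(instance, delta)]
        # 2. Weight each sample by its closeness to the original instance.
        weight = math.exp(-sum(di * di for di in delta) / kernel_width ** 2)
        # 3. Ask the black box for its output on the perturbed input.
        y = model(sample)
        row = [1.0] + delta
        for i in range(d + 1):
            xty[i] += weight * row[i] * y
            for j in range(d + 1):
                xtx[i][j] += weight * row[i] * row[j]
    # 4. The surrogate's slopes are the local feature weights (intercept dropped).
    return solve(xtx, xty)[1:]


weights = lime_explain(black_box, [0.2, 0.5, 0.1])
# Hypothetical feature names for the three inputs.
for name, w in zip(["discount", "rating", "delivery_days"], weights):
    print(f"{name}: {w:+.3f}")
```

The signs and sizes of the returned weights describe how the score reacts to small changes around this one input only; a different input can produce a different explanation.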

SHAP (SHapley Additive exPlanations): Weighing the Factors

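SHAP gives each piece of information (feature) used by the AI model a share of the credit for a specific prediction. It is based on Shapley values from cooperative game theory, and the feature contributions add up to the difference between the model's output for that instance and a baseline output (typically the average prediction), so you can see which factors had the biggest impact on the final decision. Think of it as understanding why a judge made a particular ruling by evaluating the significance of each piece of evidence.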
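The sketch below computes exact Shapley values for a small hypothetical scoring model by enumerating every coalition of features. As a simplifying assumption, a feature that is "absent" from a coalition is replaced by its value in a single `baseline` input; the `shap` library instead works with background data and faster algorithms, since exact enumeration grows exponentially with the number of features. The model `credit_score` and its inputs are invented for illustration.

```python
from itertools import combinations
from math import factorial


def credit_score(x):
    """Hypothetical model with an interaction between income and history."""
    income, history, debt = x
    return 0.5 * income + 0.3 * history - 0.4 * debt + 0.2 * income * history


def shapley_values(model, instance, baseline):
    """Exact Shapley values; 'absent' features are set to their baseline value."""
    n = len(instance)

    def value(coalition):
        x = [instance[i] if i in coalition else baseline[i] for i in range(n)]
        return model(x)

    phi = [0.0] * n
    for i in range(n):
        others = [j for j in range(n) if j != i]
        for size in range(n):
            # Weight of a coalition of this size in the Shapley formula.
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = set(subset)
                # Marginal contribution of feature i when added to coalition s.
                phi[i] += weight * (value(s | {i}) - value(s))
    return phi


instance, baseline = [1.0, 0.8, 0.5], [0.0, 0.0, 0.0]
phi = shapley_values(credit_score, instance, baseline)
print(phi)  # approximately [0.58, 0.32, -0.2]
print(sum(phi), credit_score(instance) - credit_score(baseline))
```

The last line checks SHAP's additivity property: the contributions sum to the difference between the prediction for the instance and the prediction for the baseline. Note how the income-history interaction is split evenly between those two features.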

Interpretable Models

Interpretable models are designed with transparency in mind, making their decisions easier to understand:

Rule-Based Explanations: The Rulebook

Rule-based explanations are akin to having a clear set of instructions. They provide simple, if-then statements that mimic the AI's decision-making process. It's like understanding a recipe; you follow the steps to achieve the final outcome.
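A rule-based explanation can be as simple as an ordered list of if-then rules in which the rule that fires doubles as the explanation. The sketch below assumes hypothetical rules for an e-commerce assistant; the rule texts, field names and actions are invented for illustration.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Rule:
    condition: Callable[[dict], bool]
    action: str
    explanation: str


# Hypothetical rules for an e-commerce assistant, checked in order.
RULES = [
    Rule(lambda u: u["cart_value"] > 100 and u["is_returning"],
         "offer_free_shipping",
         "IF cart value > 100 AND customer is returning THEN offer free shipping"),
    Rule(lambda u: u["days_since_visit"] > 30,
         "send_reminder",
         "IF last visit was more than 30 days ago THEN send a reminder"),
]


def decide(user):
    """Return the first matching rule's action, plus the rule itself as the explanation."""
    for rule in RULES:
        if rule.condition(user):
            return rule.action, rule.explanation
    return "no_action", "No rule matched, so no action was taken"


action, why = decide({"cart_value": 150, "is_returning": True, "days_since_visit": 2})
print(action)  # offer_free_shipping
print(why)
```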

Decision Trees: The Family Tree of Decisions

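A decision tree reaches its conclusion through a branching sequence of simple questions about the input, such as whether a value falls above or below a threshold. It's like mapping out different choices and their consequences. By following the branches from the root to a final leaf, you can see exactly how the AI reached its conclusion.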
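The following sketch walks a small, hand-built decision tree and records each branch it takes, so the prediction comes with its own explanation. The tree, its features (`sessions`, `errors_last_week`) and thresholds are hypothetical; in practice the tree would be learned from data, for example with a library such as scikit-learn.

```python
from dataclasses import dataclass
from typing import Union


@dataclass
class Leaf:
    label: str


@dataclass
class Node:
    feature: str
    threshold: float
    left: Union[Leaf, "Node"]   # followed when value <= threshold
    right: Union[Leaf, "Node"]  # followed when value > threshold


def predict_with_path(tree, x):
    """Walk the tree for input x, recording every branch taken."""
    path = []
    node = tree
    while isinstance(node, Node):
        value = x[node.feature]
        if value <= node.threshold:
            path.append(f"{node.feature} = {value} <= {node.threshold}")
            node = node.left
        else:
            path.append(f"{node.feature} = {value} > {node.threshold}")
            node = node.right
    return node.label, path


# Hypothetical tree deciding whether to show an onboarding tutorial.
tree = Node("sessions", 3,
            left=Leaf("show_tutorial"),
            right=Node("errors_last_week", 5,
                       left=Leaf("hide_tutorial"),
                       right=Leaf("show_tutorial")))

label, path = predict_with_path(tree, {"sessions": 10, "errors_last_week": 8})
print(label)               # show_tutorial
print(" AND ".join(path))  # sessions = 10 > 3 AND errors_last_week = 8 > 5
```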

Counterfactual Explanations: The What-If Scenarios

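Counterfactual explanations explore alternative realities. They show the smallest change to the input data that would have produced a different outcome. It's like asking, "What if this had been different?" to understand how the AI's decision would change. Unlike rule-based explanations and decision trees, counterfactual explanations do not require an interpretable model; they can be generated for any model by testing modified inputs.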
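A very simple way to generate counterfactuals is to nudge one feature at a time until the model's decision flips. The sketch below assumes a hypothetical loan-approval function and hand-chosen step sizes and bounds; practical counterfactual methods search over several features at once and also try to keep the suggested changes plausible and minimal.

```python
def approve(applicant):
    """Hypothetical opaque decision: approve when the weighted score reaches 40."""
    score = (0.5 * applicant["income_k"]
             + 2 * applicant["years_employed"]
             - 0.5 * applicant["debt_k"])
    return score >= 40


def single_feature_counterfactuals(model, instance, search):
    """For each feature, step it within [low, high] until the decision flips."""
    original = model(instance)
    found = {}
    for feature, (step, low, high) in search.items():
        candidate = dict(instance)
        while low <= candidate[feature] + step <= high:
            candidate[feature] += step
            if model(candidate) != original:
                found[feature] = candidate[feature]  # smallest change on this grid
                break
    return found


applicant = {"income_k": 60, "years_employed": 2, "debt_k": 20}
search = {
    "income_k": (5, 0, 200),       # try higher income in steps of 5k
    "years_employed": (1, 0, 40),  # try more years of employment
    "debt_k": (-5, 0, 100),        # try lower debt, but never below zero
}
print(approve(applicant))  # False
for feature, value in single_feature_counterfactuals(approve, applicant, search).items():
    print(f"Approved if {feature} were {value} instead of {applicant[feature]}")
```

The bounds matter: without the lower limit on `debt_k`, the search would happily suggest negative debt, which no user could act on. Here, reducing debt alone never flips the decision, so no counterfactual is reported for it.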

Case Studies of XAI Implementations

In healthcare, XAI has been instrumental in explaining the rationale behind AI-driven medical image analysis, building trust between doctors and technology. Similarly, in finance, XAI has helped explain credit decisions, increasing transparency and fairness.

In UX Design

The underlying principles of XAI remain applicable regardless of industry. An e-commerce platform could use LIME to understand why its recommendation model suggested particular products, leading to improved personalization. Or, if a model predicts user satisfaction from interface features, a UX designer could employ SHAP to identify which design elements contribute most to those predictions.

XAI techniques can help explain why certain design elements are more effective than others. By understanding the reasoning behind user preferences, designers can create more user-centric experiences.

For example, an AI-powered recommendation system could use XAI to explain why a specific product recommendation was made, helping to refine the system and improve user satisfaction.

Th!nkPricing App

Our UX design agency prioritized user understanding when designing Th!nkpricing. Using Explainable AI (XAI) principles, we demystified the AI's pricing suggestions. Users gained clarity on how the AI algorithm arrived at its recommendations and learnt to trust the system.

This transparency, combined with an intuitive interface, helped position Th!nkpricing as a reliable pricing optimization tool.


Challenges and Limitations of XAI Methods

Despite its promise, XAI is not without its challenges. The main limitations of XAI methods are:

Trade-off between simplicity and accuracy: Inherently interpretable models can be less accurate than complex black-box models, and simplified post-hoc explanations may not faithfully reflect what the model actually does.

Complexity of explanation: Explaining complex models to non-technical audiences can be challenging.

Focus on local explanations: Many XAI techniques provide insights into specific predictions but might not fully capture the model's overall behavior.

Dependence on model and data: The effectiveness of XAI methods can vary based on the underlying AI model and the data it was trained on.

Design Principles for Explainable AI

Let's consider key UX design principles that promote explainable AI. We will also look at methods for integrating XAI into the iterative design process.

UX Design Principles Promoting Transparency and Accountability

Here are UX design guidelines to follow when implementing XAI:

Clarity and Simplicity: Design interfaces that present AI outputs and explanations in a clear and understandable manner. Use plain language and visual aids to convey complex AI decisions to users effectively.

Contextual Relevance: Provide explanations that are relevant to the user's current context and task. Tailor the depth and detail of explanations based on the user's familiarity with AI concepts.

User Control: Empower users with tools to interact with AI models, such as adjusting input variables or exploring alternative scenarios. This fosters a sense of control and understanding over AI-driven decisions.

Feedback Mechanisms: Implement feedback loops where users can provide input on the usefulness and comprehensibility of AI explanations. This helps refine explanations based on user needs and expectations.

How to Integrate XAI into the Iterative Design Process

Involving users in the design of AI explanations is essential for creating transparent and user-friendly AI systems. Designers must also implement robust feedback mechanisms. Here are the steps to achieve that effectively:

1. Involving Users in the Design of AI Explanations

a. Start With User Research and Needs Assessment:

Understanding User Expectations: Conduct qualitative research to identify user expectations, concerns, and comprehension levels regarding AI explanations.

Persona Development: Create user personas that represent different user demographics and their specific needs related to AI interaction and transparency.

b. Co-design Workshops and Iterative Prototyping

Collaborative Design Sessions: Engage users, UX designers, and AI developers in co-design workshops to brainstorm and prototype AI explanation interfaces.

Iterative Prototyping: Develop multiple iterations of AI explanations based on user feedback, focusing on clarity, relevance, and ease of understanding.

2. User Testing and Feedback Mechanisms for XAI

a. Prototyping AI Explanations

Interactive Prototypes: Create interactive prototypes that allow users to interact with AI explanations in simulated scenarios.

Usability Testing: Conduct usability testing sessions to observe how users interact with AI explanations in real time and gather qualitative feedback.

b. Continuous Feedback Loops

Feedback Collection Tools: Implement tools such as feedback forms, surveys, and user ratings within AI applications to collect continuous feedback on the effectiveness of explanations.

Analytics and User Behavior Monitoring: Use analytics to track user interactions with AI explanations and identify areas for improvement based on user behavior patterns.
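As a rough sketch of the continuous feedback loop, the snippet below aggregates hypothetical "Was this explanation helpful?" responses per explanation and flags explanations that users consistently rate poorly. The event fields, explanation IDs and the `min_responses` and `threshold` values are assumptions for illustration; a real product would read these events from its own analytics pipeline.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class FeedbackEvent:
    explanation_id: str  # which explanation the user saw (hypothetical ID)
    helpful: bool        # answer to "Was this explanation helpful?"
    expanded: bool       # whether the user opened the detailed view


def summarize(events, min_responses=3, threshold=0.6):
    """Aggregate feedback per explanation and flag poorly rated ones."""
    totals = defaultdict(lambda: {"responses": 0, "helpful": 0, "expanded": 0})
    for event in events:
        t = totals[event.explanation_id]
        t["responses"] += 1
        t["helpful"] += int(event.helpful)
        t["expanded"] += int(event.expanded)
    report = {}
    for exp_id, t in totals.items():
        helpful_rate = t["helpful"] / t["responses"]
        report[exp_id] = {
            "responses": t["responses"],
            "helpful_rate": helpful_rate,
            "expand_rate": t["expanded"] / t["responses"],
            # Only flag explanations with enough responses to be meaningful.
            "needs_review": t["responses"] >= min_responses and helpful_rate < threshold,
        }
    return report


events = [
    FeedbackEvent("price_drivers", helpful=False, expanded=True),
    FeedbackEvent("price_drivers", helpful=False, expanded=True),
    FeedbackEvent("price_drivers", helpful=True, expanded=False),
    FeedbackEvent("price_drivers", helpful=False, expanded=True),
    FeedbackEvent("competitor_comparison", helpful=True, expanded=False),
    FeedbackEvent("competitor_comparison", helpful=True, expanded=True),
    FeedbackEvent("competitor_comparison", helpful=True, expanded=False),
]
for exp_id, stats in summarize(events).items():
    print(exp_id, stats)
```

Combining a low helpfulness rate with a high expand rate, as for `price_drivers` here, can hint that users want the explanation but find its first view insufficient.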

The Future of Explainable AI in Design

As AI becomes increasingly integrated into the design process, the demand for transparent and accountable systems will only grow. The field of Explainable AI is rapidly evolving, with new techniques and applications emerging continuously.

Emerging Trends and Technologies in XAI

Advancements in natural language processing and human-computer interaction will play a crucial role in shaping the future of XAI. Developing intuitive interfaces to communicate complex explanations will be essential. Additionally, research into combining multiple XAI techniques to create more comprehensive explanations is a promising area.

How Will XAI Impact the Design Industry?

XAI has the potential to revolutionize the design industry by promoting trust, improving decision-making, and enhancing collaboration between humans and AI. As designers gain a deeper understanding of AI systems, they can create more innovative and user-centric products. Moreover, XAI can help democratize design by making it more accessible to individuals without extensive design expertise.

Ongoing Education and Training is Important

To fully realize the potential of XAI, designers, developers, and other stakeholders need ongoing education and training. Understanding the principles of XAI and how to apply them effectively is crucial. Additionally, promoting a culture of transparency and accountability within organizations will help build trust in AI-driven design systems.

Conclusion

Explainable AI (XAI) is essential for bridging the gap between complex AI models and human understanding. By providing transparency into the decision-making processes of AI systems, XAI builds trust, improves decision-making, and mitigates ethical risks. While challenges persist, the benefits of XAI in design are undeniable.

Thus, collaborating with an AI design agency that understands and implements XAI will help you to fully realize the potential of AI in product design.

As AI continues to evolve, so too must our commitment to transparency and accountability. Through XAI, we can ensure that AI is a force for good.

FAQs

What is Explainable AI (XAI)?

Explainable AI (XAI) is about making AI decisions understandable to humans. It helps us understand how AI arrives at its conclusions, building trust and ensuring ethical use in design.

Why is XAI important in design?

XAI helps designers understand why AI makes certain decisions, improving problem-solving and decision-making. It also helps ensure designs are fair, unbiased, and compliant with regulations.

How can designers implement XAI?

Designers should collaborate with data scientists to understand XAI techniques. They should prioritize transparency, use XAI tools to analyze designs, and continually educate themselves on XAI advancements.