Introduction

Artificial intelligence (AI) is quickly changing the way we understand and design user experiences. AI-powered tools make research more efficient by automating tedious tasks and processing large volumes of data. That said, the rapid advancement of AI raises significant ethical concerns that must be carefully considered to ensure responsible and equitable practices.

In this article, we explore the key ethical considerations surrounding the use of AI in UX research. We examine issues such as bias, privacy, transparency, and accountability, and discuss strategies for mitigating them.

By understanding the ethical implications of AI in UX research, designers can harness the power of this technology while protecting the rights and interests of users.

The Benefits of AI in UX Research

AI tools can significantly streamline data collection, analysis, and insight generation. This enhanced efficiency allows UX researchers to gather and analyze larger datasets more quickly, leading to faster and more informed decision-making.

Let's look more closely at how this benefits the UX design process.

Enhanced Efficiency and Scalability

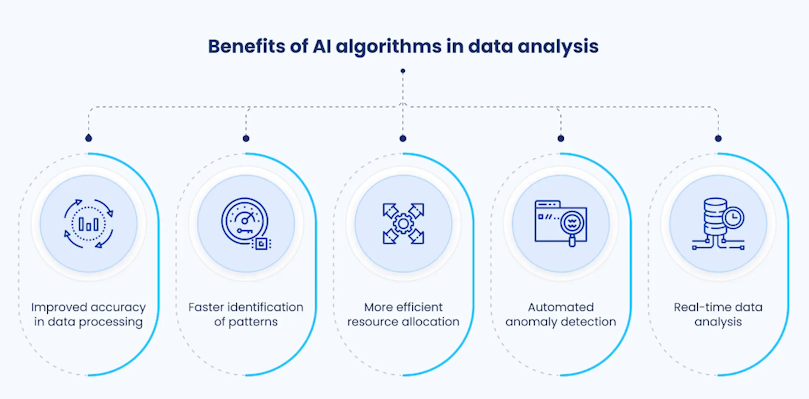

One of the most significant advantages of AI in UX research is its ability to handle large volumes of data. Traditional research methods often involve manual data entry, analysis, and interpretation, which can be time-consuming and prone to errors. AI-powered tools can automate these tasks, freeing up researchers to focus on higher-value activities.

For example, AI-driven research tools can automatically extract data from various sources, such as websites, social media platforms, and customer support interactions. This reduces the need for manual data entry and helps keep data collection consistent and accurate.

But it doesn't end there. AI algorithms can also analyze large datasets quickly and efficiently, identifying patterns and trends that may not be apparent to human researchers.

Improved Accuracy and Objectivity

Human biases can often influence the interpretation of research data, leading to inaccurate or skewed conclusions. AI algorithms, on the other hand, can be designed to reduce these biases and process data more consistently. This may lead to more accurate and reliable insights.

For instance, AI-powered tools can help identify and correct biases in survey data, such as social desirability bias or response set bias. This helps make the collected data more representative of the target population, so that it can be used to make informed decisions.

New Opportunities for Innovation

AI also enables novel approaches to UX research that were previously impractical or impossible. One such approach is personalized experiences. By analyzing individual user behavior and preferences, AI can create customized recommendations and content. Personalization can enhance user satisfaction and engagement, leading to increased loyalty and revenue.

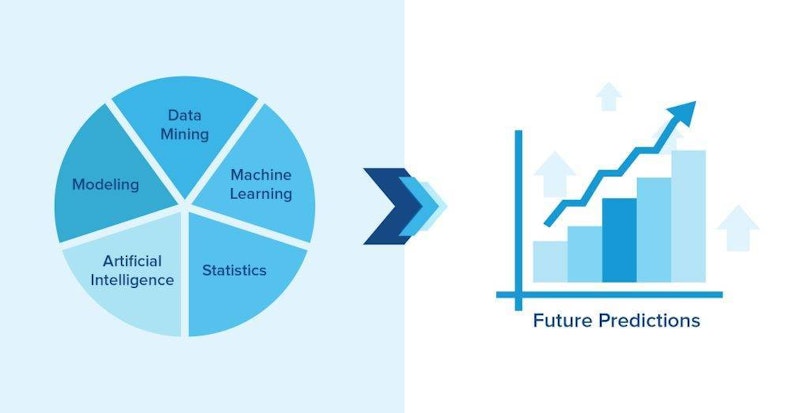

Another area where AI can drive innovation is predictive analytics. Utilizing historical data and trends, AI algorithms can predict future user behavior and preferences. With this information, designers can proactively address potential issues and optimize the user experience.

For example, using AI, we can predict which users are likely to churn and identify the factors contributing to churn. This will allow organizations to take steps to retain these customers and improve overall customer satisfaction.
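To make the idea concrete, here is a minimal sketch of churn prediction using a small logistic regression written in plain Python. The feature names (`inactivity`, `support_tickets`), the six toy users, and the training settings are all hypothetical and chosen only for illustration; a real project would use a vetted library and far more data.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def train_logistic(rows, labels, lr=0.5, epochs=2000):
    """Fit logistic regression with batch gradient descent.
    Returns (weights, bias)."""
    n_features = len(rows[0])
    weights = [0.0] * n_features
    bias = 0.0
    n = len(rows)
    for _ in range(epochs):
        grad_w = [0.0] * n_features
        grad_b = 0.0
        for x, y in zip(rows, labels):
            # Prediction error for this user: predicted probability minus label.
            error = sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias) - y
            for j, xi in enumerate(x):
                grad_w[j] += error * xi
            grad_b += error
        # Step against the mean gradient.
        weights = [w - lr * g / n for w, g in zip(weights, grad_w)]
        bias -= lr * grad_b / n
    return weights, bias

def churn_probability(x, weights, bias):
    return sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)

# Hypothetical features, each scaled to the range 0..1.
FEATURES = ["inactivity", "support_tickets"]
users = [(0.1, 0.0), (0.2, 0.1), (0.3, 0.0), (0.8, 0.6), (0.9, 0.8), (0.7, 0.9)]
churned = [0, 0, 0, 1, 1, 1]  # 1 = the user churned

weights, bias = train_logistic(users, churned)
at_risk = churn_probability((0.9, 0.8), weights, bias)
loyal = churn_probability((0.1, 0.0), weights, bias)
print(f"at-risk user: {at_risk:.2f}, loyal user: {loyal:.2f}")
for name, w in zip(FEATURES, weights):
    print(f"{name}: weight {w:+.2f}")
```

The learned weights hint at which factors are associated with churn in this toy data. They show association, not causation, so they should be treated as a starting point for further research rather than a conclusion.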

Ethical Challenges of AI in UX Research

As previously mentioned, AI also raises significant ethical concerns that must be carefully addressed. Among the most pressing are the risk of perpetuating or amplifying biases present in data or algorithms, threats to data privacy, a lack of transparency, and unclear accountability.

Bias and Discrimination

AI algorithms are trained on large datasets, and if these datasets contain biases, the AI may learn to replicate those biases. For example, if an AI system is trained on data that disproportionately represents a certain demographic group, it may be more likely to make biased predictions or recommendations. This can lead to discrimination and unfair treatment of certain users.

To mitigate the risk of bias, it is important to use diverse and representative datasets when training AI models.

Additionally, regular audits should be conducted to identify and address any biases that may have crept into the system.
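One simple starting point for such an audit is to compare how each group is represented in the dataset against a reference population. The sketch below assumes hypothetical age bands, reference shares, and a 0.8 threshold; none of these values come from a standard, and a real audit would look at many more dimensions.

```python
from collections import Counter

def representation_gaps(sample_groups, reference_shares, min_ratio=0.8):
    """Return groups whose share of the sample is below
    min_ratio times their share of the reference population."""
    counts = Counter(sample_groups)
    total = len(sample_groups)
    flagged = {}
    for group, expected in reference_shares.items():
        observed = counts.get(group, 0) / total if total else 0.0
        if observed < min_ratio * expected:
            flagged[group] = (observed, expected)
    return flagged

# Hypothetical participant pool: age bands of 10 research participants.
participants = ["18-34"] * 7 + ["35-54"] * 2 + ["55+"] * 1
# Hypothetical share of each age band in the target user base.
reference = {"18-34": 0.40, "35-54": 0.35, "55+": 0.25}

under = representation_gaps(participants, reference)
for group, (observed, expected) in under.items():
    print(f"{group}: {observed:.0%} of sample vs {expected:.0%} expected")
```

In this toy example the two older age bands are flagged as under-represented, which would be a cue to recruit more participants from those groups before training or drawing conclusions.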

Privacy and Data Security

The use of AI in UX research involves the collection and analysis of large amounts of user data. Understandably, this raises concerns about privacy and data security. Sensitive user information, such as personal preferences, browsing history, and location data, may be collected and stored by AI-powered tools.

To protect user privacy, organizations must implement strong data security measures, including encryption and access controls. Additionally, users should be informed about the data that is being collected and how it will be used. This is where clear privacy policies and informed consent procedures come in.
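As one illustration of limiting what is stored, the sketch below replaces raw user identifiers with a keyed hash (HMAC-SHA256 from Python's standard library) and drops fields the analysis does not need. The record layout, the field names, and the `SECRET_KEY` value are hypothetical. Note that this is pseudonymization rather than full anonymization: anyone holding the key can re-link records, so the key needs the same protection as the data itself.

```python
import hashlib
import hmac

def pseudonymize(user_id: str, secret_key: bytes) -> str:
    """Replace a raw identifier with a keyed hash (HMAC-SHA256)."""
    return hmac.new(secret_key, user_id.encode("utf-8"), hashlib.sha256).hexdigest()

def scrub_record(record: dict, secret_key: bytes,
                 drop_fields=("email", "location")) -> dict:
    """Drop fields the analysis does not need and pseudonymize the user ID."""
    cleaned = {k: v for k, v in record.items() if k not in drop_fields}
    cleaned["user_id"] = pseudonymize(record["user_id"], secret_key)
    return cleaned

# Hypothetical key; in practice, load it from a secrets manager.
SECRET_KEY = b"replace-with-a-real-secret"

raw = {
    "user_id": "user-1042",
    "email": "person@example.com",
    "location": "Lisbon",
    "task_time_seconds": 37,
}
safe = scrub_record(raw, SECRET_KEY)
print(sorted(safe))  # remaining field names
```

Because the same identifier always maps to the same hash under a given key, researchers can still follow one participant across sessions without seeing who that participant is.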

Transparency and Explainability

AI algorithms can often be complex and opaque, making it difficult to understand how they arrive at their decisions. This can lead to what is known as the "black box" problem, where it is difficult to explain or justify the AI's outputs.

AI explainability and transparency are essential for ensuring ethical and responsible usage. By understanding how AI algorithms work, researchers and organizations can identify and address any biases or errors.

Techniques for increasing transparency include providing explanations for AI-generated recommendations and making the underlying algorithms more accessible to scrutiny.
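For a simple scoring model, an explanation can be as basic as showing how much each input contributed to the result. The sketch below assumes a hypothetical linear recommendation score, where each feature's contribution is its weight multiplied by its value; the feature names and weights are made up for illustration, and more complex models need dedicated attribution methods.

```python
def explain_score(weights, features, top_n=3):
    """Return the total score and the top_n features ranked by
    the absolute size of their contribution (weight * value)."""
    contributions = {
        name: weights.get(name, 0.0) * value for name, value in features.items()
    }
    score = sum(contributions.values())
    ranked = sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True)
    return score, ranked[:top_n]

# Hypothetical model weights and one user's feature values.
weights = {
    "viewed_similar_items": 0.5,
    "days_since_last_visit": -0.25,
    "items_in_cart": 0.75,
}
user = {"viewed_similar_items": 4, "days_since_last_visit": 2, "items_in_cart": 1}

score, reasons = explain_score(weights, user)
print(f"score: {score}")
for name, contribution in reasons:
    print(f"{name}: {contribution:+.2f}")
```

Surfacing a ranked list like this next to a recommendation gives researchers and users something concrete to question, which is the point of making a system open to scrutiny.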

Accountability and Responsibility

Researchers and organizations have an ethical obligation to ensure that AI is used responsibly. This includes taking steps to mitigate the risks of bias, discrimination, and privacy violations.

To promote accountability, organizations must develop ethical guidelines for the use of AI and establish oversight committees to monitor compliance. Additionally, legal frameworks can be put in place to regulate the use of AI and hold organizations accountable for any harm caused.

Case Studies and Best Practices

Examples of Successful AI Applications in UX Research

AI has been successfully applied in various UX research projects, demonstrating its potential to improve efficiency, accuracy, and innovation. For example, McDonald's uses sentiment analysis to analyze customer support interactions and identify common pain points. This enables the company to make improvements to its products and services.

Amazon and Netflix use AI to personalize recommendations for their customers based on browsing, viewing, and purchase behavior, with the aim of increasing customer satisfaction and sales.

Best Practices for Ethical AI in UX Research

To ensure that AI is used ethically and responsibly in UX research, organizations should follow these best practices:

Conduct thorough ethical assessments: Before deploying AI systems, organizations should conduct a thorough ethical assessment to identify potential risks and mitigate them.

Involve diverse stakeholders: Diverse stakeholders, including users, researchers, and ethicists, should be involved in the development and deployment of AI systems. This helps to ensure that the AI is designed and used in an ethical manner.

Regularly monitor and evaluate: The ethical implications of AI use should be regularly monitored and evaluated. This allows organizations to identify and address any emerging issues.

Organizations that follow these best practices can use AI productively while protecting the rights of users.

Future Directions and Emerging Ethical Issues

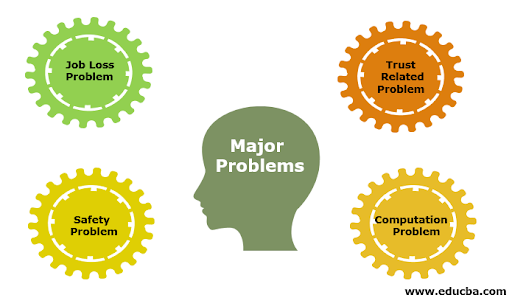

As AI continues to advance, we must anticipate and address emerging ethical challenges. Failure to do so could have significant negative consequences for individuals, organizations, and society as a whole.

Emerging Ethical Concerns

One of the most pressing ethical concerns related to AI is the potential for job displacement. As AI becomes more capable of performing tasks traditionally done by humans, there is a risk that jobs may be lost or transformed. This could have significant economic and social implications.

Another emerging ethical concern is the potential for AI to be used for malicious purposes. For example, AI could be used to create deepfakes or spread misinformation. This could have serious consequences for individuals and organizations, as well as for society as a whole.

The Role of Human Oversight

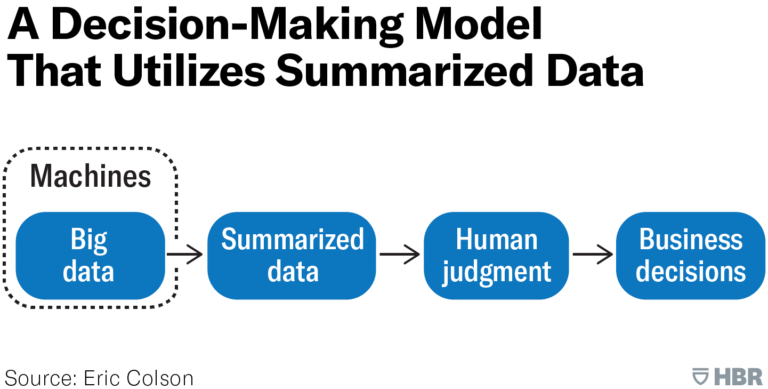

To mitigate these risks, humans must maintain oversight over AI-driven systems. Human oversight can help to ensure that AI is used ethically and responsibly. For example, humans can be responsible for setting ethical guidelines, monitoring AI systems for bias, and intervening when necessary.

Human oversight can also help to prevent AI from being used for malicious purposes. By understanding how AI works, we can identify and address potential vulnerabilities.

The Need for Collaborative Solutions

Addressing the ethical challenges of AI requires a collaborative effort. Researchers, policymakers, and industry leaders must work together to develop and implement ethical guidelines, regulations, and best practices.

Researchers can play an important role in developing AI systems that are ethical and responsible. They can also conduct research to identify and address emerging ethical challenges. Policymakers can develop laws and regulations to govern the use of AI. Industry leaders can implement ethical guidelines and best practices within their organizations.

Conclusion

The benefits of integrating AI into UX research are clear. But to ensure that AI is used responsibly and equitably, researchers and organizations must carefully consider the ethical implications.

That way, we can harness the power of this technology while protecting the interests of users. Organizations need to apply the measures we've listed here throughout the development and deployment of AI systems.

And while AI presents potential risks, we cannot overlook the tremendous opportunities for positive social impact. By using AI responsibly, we can help create a future where AI enhances user experiences and improves people's lives.

FAQs

How can AI bias be mitigated in UX research?

Mitigating AI bias in UX research involves using diverse datasets, regularly auditing AI models, and involving diverse stakeholders in the development and deployment of AI systems. Additionally, techniques like adversarial training and fairness metrics can help identify and address biases.
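As an example of a fairness metric, the sketch below computes the demographic parity difference: the gap between the highest and lowest rate of positive outcomes across groups. The group labels and predictions are hypothetical toy data, and this is only one of several fairness metrics; which one is appropriate depends on the context.

```python
from collections import defaultdict

def selection_rates(groups, predictions):
    """Share of positive predictions (1) within each group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for group, prediction in zip(groups, predictions):
        totals[group] += 1
        positives[group] += 1 if prediction else 0
    return {group: positives[group] / totals[group] for group in totals}

def demographic_parity_difference(groups, predictions):
    """Gap between the highest and lowest group selection rate.
    0.0 means every group receives positive outcomes at the same rate."""
    rates = selection_rates(groups, predictions)
    return max(rates.values()) - min(rates.values())

# Hypothetical data: which users a model chose to show a new feature to.
groups = ["A"] * 4 + ["B"] * 4
predictions = [1, 1, 1, 0, 1, 0, 0, 0]

rates = selection_rates(groups, predictions)
gap = demographic_parity_difference(groups, predictions)
print(rates, gap)
```

Here group A is selected 75% of the time and group B 25% of the time, a gap of 0.5 that would warrant a closer look at the model and its training data.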

What are the privacy implications of using AI in UX research?

Using AI in UX research raises concerns about data privacy and security. Organizations must implement strong data security measures, obtain informed consent from users, and adhere to relevant privacy regulations. Anonymization and encryption can also help protect user data.

How can organizations ensure transparency and explainability in AI-driven UX research?

Transparency and explainability are essential for ethical AI use. Organizations can increase transparency by providing explanations for AI-generated recommendations, making the underlying algorithms more accessible, and involving users in the development process. Additionally, techniques like feature attribution and model visualization can help explain AI decisions.